Our papers are the official record of our discoveries. They allow others to build on and apply our work. Each one is the result of many months of research, so we take special care to make our papers clear, inspiring and beautiful, and we publish them in leading journals.

Authors: J. Wang, T. Fink, A. Stepanenko, M. Burtsev, A. V. Kosyak, Y. He, O. Gamayun, E. Sobko, F. Sheldon, F. Caravelli, I. Shkredov, A. Sarikyan, A. Esterov, A. Ochirov, M. Reeves, G. Caldarelli, R. Hannam, A. Coolen, O. Dahlsten, A. Mozeika, M. Bardoscia, P. Barucca, M. Rowley, I. Teimouri, F. Antenucci, A. Scala, R. Farr, A. Zegarac, S. Sebastio, B. Bollobás, F. Lafond, D. Farmer, C. Pickard, T. Reeves, J. Blundell, A. Gallagher, M. Przykucki, P. Smith, L. Pietronero

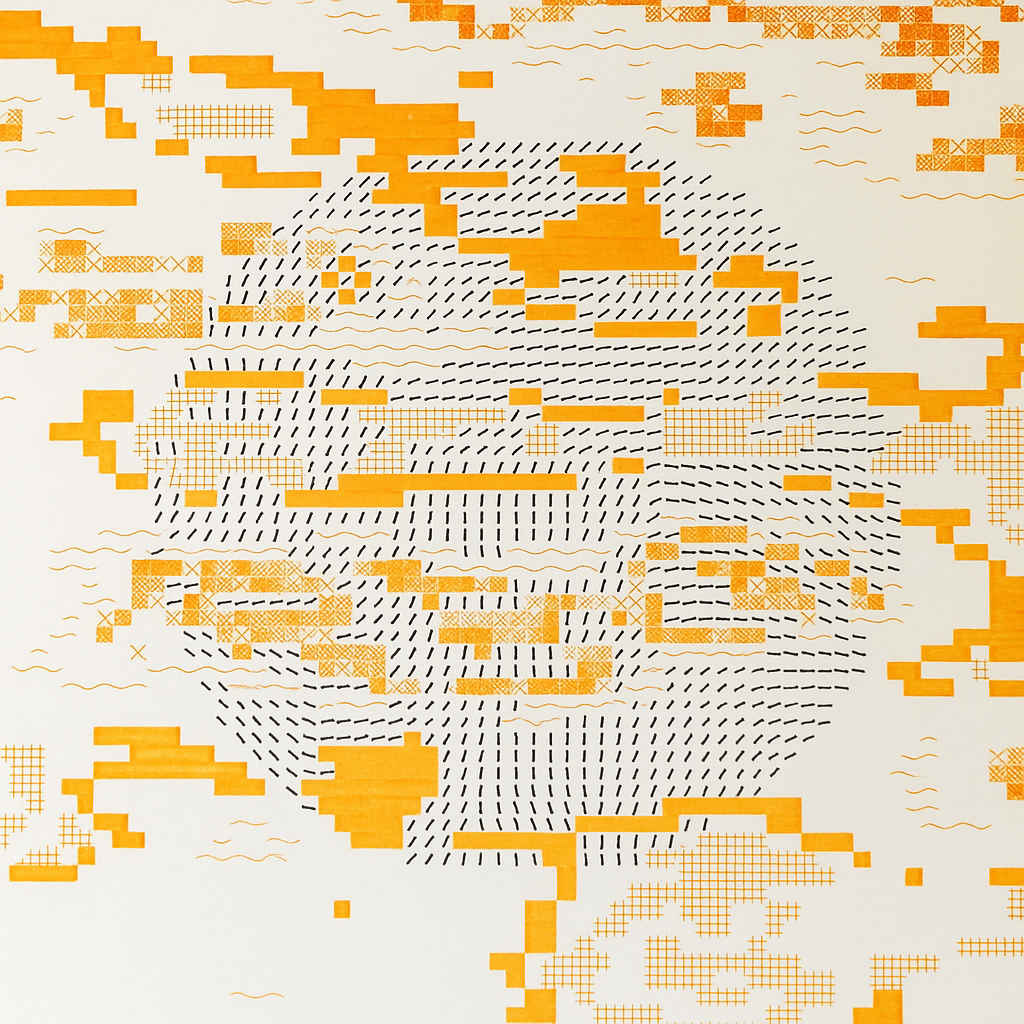

Machine learning

Boosting AI reasoning

By increasing the effective depth of neural networks, we improve their sequential reasoning abilities in tasks involving cellular automata.

Machine learning

Limits of attention

We demonstrate that transformer attention discriminates well only at shorter context lengths and becomes less selective as input length increases.

Machine learning

Multitasking memory

The performance of a memory-augmented transformer model improves greatly when it learns several key tasks at once during training.

Computational biology

Adaptability speeds evolution

Based on computer simulations, we argue that developmental plasticity accelerates evolution and drives organisms towards ever-greater complexity.

Machine learning

The limits of LLMs

Large language models like ChatGPT can generate human-like text, but businesses that overestimate their abilities risk misusing the technology.

Machine learning

DeepPavlov Dream

A new open-source platform is specifically tailored for developing complex dialogue systems, like generative conversational AI assistants.

Computational linguistics

Cross-lingual knowledge

Models trained on a Russian topical dataset of knowledge-grounded human-human conversations can perform real-world tasks across languages.

Machine learning

Speaking DNA

A family of transformer-based DNA language models can interpret genomic sequences, opening new possibilities for complex biological research.

Machine learning

BERT enhanced with recurrence

The Recurrent Memory Transformer (RMT) tackles the quadratic complexity of attention in transformers by combining token-based memory with segment-level recurrence.

AI-assisted maths

Free energy and learning

Using the free energy principle to derive multiple theories of associative learning allows us to combine them into a single, unifying framework.